Commerce Tracking with Google Analytics for Android

Posted: 2011-05-05 14:17:05 UTC-07:00

[This post is by Jim Cotugno and Nick Mihailovski, engineers who work on Google Analytics — Tim Bray]

Today we released a new version of the Google Analytics Android SDK which includes support for tracking e-commerce transactions. This post walks you through setting it up in your mobile application.

Why It’s Important

If you allow users to purchase goods in your application, you’ll want to understand how much revenue your application generates as well as which products are most popular.

With the new e-commerce tracking functionality in the Google Analytics Android SDK, this is easy.

Before You Begin

In this post, we assume you’ve already configured the Google Analytics Android SDK to work in your application. Check out our SDK docs if you haven’t already.

We also assume you have a Google Analytics tracking object instance declared in your code:

    GoogleAnalyticsTracker tracker;

Then in the activity’s onCreate method, you have initialized the tracker member variable and called start:

    tracker = GoogleAnalyticsTracker.getInstance();
    tracker.start("UA-YOUR-ACCOUNT-HERE", 30, this);

Setting Up The Code

The best time to track a transaction is when you’ve received confirmation for a purchase. For example, if you have a callback method that is called when a purchase is confirmed, you would call the tracking code there.

    public void onPurchaseConfirmed(List<PurchaseObject> purchases) {
        // Use Google Analytics to record the purchase information here...
    }

Tracking The Transaction

The Google Analytics Android SDK provides its own Transaction object to store values Google Analytics collects. The next step is to copy the values from the list of PurchaseObjects into a Transaction object.

The SDK’s Transaction object uses the builder pattern, where the constructor takes the required arguments and the optional arguments are set using setters:

    Transaction.Builder builder = new Transaction.Builder(
            purchase.getOrderId(),
            purchase.getTotal())
        .setTotalTax(purchase.getTotalTax())
        .setShippingCost(purchase.getShippingCost())
        .setStoreName(purchase.getStoreName());

You then add the transaction by building it and passing it to a Google Analytics tracking object:

    tracker.addTransaction(builder.build());

Tracking Each Item

The next step is to track each item within the transaction. This is similar to tracking transactions, using the Item class provided by the Google Analytics SDK for Android. Google Analytics uses the OrderID as a common ID to associate a set of items with its parent transaction.

Let’s say the PurchaseObject above has a list of one or more ListItem objects. You can then iterate through each ListItem and create and add the item to the tracker.

    for (ListItem listItem : purchase.getListItems()) {
        Item.Builder itemBuilder = new Item.Builder(
                purchase.getOrderId(),
                listItem.getItemSKU(),
                listItem.getPrice(),
                listItem.getCount())
            .setItemCategory(listItem.getItemCategory())
            .setItemName(listItem.getItemName());

        // Now add the item to the tracker. The order ID is the key
        // Google Analytics uses to associate this item to the transaction.
        tracker.addItem(itemBuilder.build());
    }

Sending the Data to Google Analytics

Finally, once all the transactions and items have been added to the tracker, you call:

    tracker.trackTransactions();

This sends the transactions to the dispatcher, which will transmit the data to Google Analytics.

Viewing The Reports

Once data has been collected, you can then log into the Google Analytics Web Interface and go to the Conversions > Ecommerce > Product Performance report to see how much revenue each product generated.

Here we see that many people bought potions, which generated the most revenue for our application. Also, more people bought the blue sword than the red sword, which could mean we need to stock more blue items in our application. Awesome!

Learning More

You can learn more about the new e-commerce tracking feature in the Google Analytics SDK for Android developer documentation.

What’s even better is that we’ll be demoing all this new functionality this year at Google IO, in the Optimizing Android Apps With Google Analytics session.
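For readers unfamiliar with the builder pattern that Transaction and Item use above, here is a minimal plain-Java sketch of the same shape: required values go to the builder's constructor, optional values are set through chained setters, and build() produces the finished object. The Order class and all of its field names are hypothetical stand-ins for illustration; they are not the SDK's classes.

```java
// Hypothetical stand-in types for illustration only. These are NOT the
// Google Analytics SDK classes; they only mirror the builder shape.
final class Order {
    private final String orderId;      // required
    private final double total;        // required
    private final double totalTax;     // optional, defaults to 0
    private final double shippingCost; // optional, defaults to 0
    private final String storeName;    // optional, may be null

    private Order(Builder b) {
        this.orderId = b.orderId;
        this.total = b.total;
        this.totalTax = b.totalTax;
        this.shippingCost = b.shippingCost;
        this.storeName = b.storeName;
    }

    String getOrderId() { return orderId; }
    double getTotal() { return total; }
    double getTotalTax() { return totalTax; }
    double getShippingCost() { return shippingCost; }
    String getStoreName() { return storeName; }

    static final class Builder {
        private final String orderId;
        private final double total;
        private double totalTax;
        private double shippingCost;
        private String storeName;

        // Required arguments are taken by the constructor.
        Builder(String orderId, double total) {
            this.orderId = orderId;
            this.total = total;
        }

        // Each optional setter returns the builder so calls can be chained.
        Builder setTotalTax(double totalTax) { this.totalTax = totalTax; return this; }
        Builder setShippingCost(double shippingCost) { this.shippingCost = shippingCost; return this; }
        Builder setStoreName(String storeName) { this.storeName = storeName; return this; }

        Order build() { return new Order(this); }
    }
}

class BuilderDemo {
    public static void main(String[] args) {
        Order order = new Order.Builder("order-1001", 12.50)
            .setTotalTax(1.00)
            .setStoreName("Example Store")
            .build();
        System.out.println(order.getOrderId() + " from " + order.getStoreName());
    }
}
```

Optional values that are never set simply keep their defaults, which is why a caller can skip any of the setters without affecting the required arguments.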

Merchant Sales Reports on Android Market

Posted: 2011-04-26 09:15:05 UTC-07:00

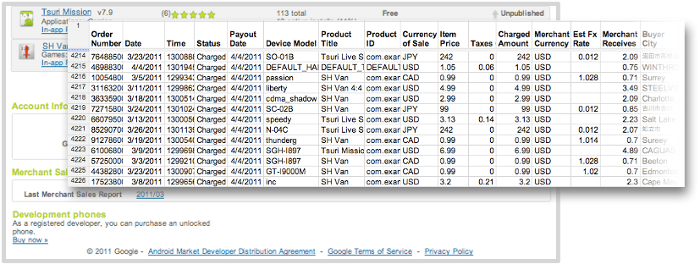

[This post is by Eric Chu, Android Developer Ecosystem. —Dirk Dougherty]

As part of our ongoing efforts to provide better tools to help you manage your business, we are introducing merchant sales reporting on Android Market. Developers now have convenient access to monthly reports that detail the financial performance of their applications directly from the Android Market Developer Console.

Based on Google Checkout financial data, these reports provide per-transaction details including additional information such as device information, currency of sale, and currency conversion rate. Developers will be able to easily download these reports as CSV (comma-separated values) files to enable further analysis at their convenience.

Starting today, developers can download merchant sales reports for March 2011 from the Developer Console. Reports for months going back to January 2010 will be available in the coming weeks. Moving forward, sales reports for each month will be available by the 10th day of the following month.

We hope you’ll find these new sales reports useful. As always, please don’t hesitate to give us feedback through Market Help Center.

Customizing the Action Bar

Posted: 2011-04-13 14:39:58 UTC-07:00

[This post is by Nick Butcher, an Android engineer who notices small imperfections, and they annoy him. — Tim Bray]

Since the introduction of the Action Bar design pattern, many applications have adopted it as a way to provide easy access to common actions. In Android 3.0 (or Honeycomb to its friends) this pattern has been baked in as the default navigation paradigm and extended to take advantage of the extra real-estate available on tablets. By using the Action Bar in your Honeycomb-targeted apps, you'll give your users a familiar way to interact with your application. Also, your app will be better prepared to scale across the range of Android devices that will be arriving starting in the Honeycomb era.

Just because Action Bars are familiar doesn’t mean that they have to be identical! The following code samples and accompanying project demonstrate how to style the Action Bar to match your application’s branding. I’ll demonstrate how to take Honeycomb’s Holo.Light theme and customise it to match this blog’s colour scheme.

    <style name="Theme.AndroidDevelopers" parent="android:style/Theme.Holo.Light">
        …
    </style>

Icon

This step is easy; I’ll use the wonderful Android Asset Studio to create an icon in my chosen colour scheme. For extra credit, I’ll use this image as a starting point to create a more branded logo.

Navigation

Next up, the navigation section of the Action Bar operates in three different modes; I’ll tackle each of these in turn.

Standard

The Action Bar’s standard navigation mode simply displays the title of the activity. This doesn’t require any styling... next!

List

To the left, a standard list drop-down; to the right, the effect we want to achieve.

The default styling in list navigation mode has a blue colour scheme. This is evident both in the top bar when touching the collapsed control and in the selection highlight in the expanded list. We can theme this element by overriding android:actionDropDownStyle with a custom style to implement our colour scheme:

    <!-- style the list navigation -->
    <style name="MyDropDownNav" parent="android:style/Widget.Holo.Light.Spinner.DropDown.ActionBar">
        <item name="android:background">@drawable/ad_spinner_background_holo_light</item>
        <item name="android:popupBackground">@drawable/ad_menu_dropdown_panel_holo_light</item>
        <item name="android:dropDownSelector">@drawable/ad_selectable_background</item>
    </style>

The above uses a combination of state list drawables and 9-patch images to style the collapsed spinner and the top bar of the expanded list, and to set the highlight colour when picking an item.

Tabs

Here are the before-and-after shots on the tab navigation control:

The tab navigation control uses the standard blue colouring. We can apply a custom style to android:actionBarTabStyle to set our own custom drawable that uses our desired palette:

    <!-- style for the tabs -->
    <style name="MyActionBarTabStyle" parent="android:style/Widget.Holo.Light.ActionBarView_TabView">
        <item name="android:background">@drawable/actionbar_tab_bg</item>
        <item name="android:paddingLeft">32dp</item>
        <item name="android:paddingRight">32dp</item>
    </style>

Actions

Before-and-after on the individual items in the Action Bar:

The individual action items inherit the default blue background when selected. We can customise this behaviour by overriding android:selectableItemBackground and setting a shape drawable with our desired colouring:

    <item name="android:selectableItemBackground">@drawable/ad_selectable_background</item>

The overflow menu also needs some attention, as when expanded it shows a blue bar at the top of the list. We can override android:popupMenuStyle and set a custom drawable (in fact the very same drawable we previously used for list navigation) for the top of the overflow menu:

    <!-- style the overflow menu -->
    <style name="MyPopupMenu" parent="android:style/Widget.Holo.Light.ListPopupWindow">
        <item name="android:popupBackground">@drawable/ad_menu_dropdown_panel_holo_light</item>
    </style>

Selecting items within the overflow menu also shows the default selection colour. We can set our customised selection colour by overriding android:dropDownListViewStyle:

    <!-- style the items within the overflow menu -->
    <style name="MyDropDownListView" parent="android:style/Widget.Holo.ListView.DropDown">
        <item name="android:listSelector">@drawable/ad_selectable_background</item>
    </style>

These changes get us most of the way there, but it’s attention to detail that makes an app. Check boxes and radio buttons within menu items in the overflow section are still using the default assets, which have a blue highlight. Let’s override them to fit in with our theme:

    <item name="android:listChoiceIndicatorMultiple">@drawable/ad_btn_check_holo_light</item>
    <item name="android:listChoiceIndicatorSingle">@drawable/ad_btn_radio_holo_light</item>

Background

I’ve left the background transparent as inheriting from Holo.Light works well for our desired palette. If you’d like to customise it, you can easily override the android:background item on the android:actionBarStyle style:

    <style name="MyActionBar" parent="android:style/Widget.Holo.Light.ActionBar">
        <item name="android:background">@drawable/action_bar_background</item>
    </style>

Bringing it all together

Putting all of these components together we can create a custom style:

    <style name="Theme.AndroidDevelopers" parent="android:style/Theme.Holo.Light">
        <item name="android:selectableItemBackground">@drawable/ad_selectable_background</item>
        <item name="android:popupMenuStyle">@style/MyPopupMenu</item>
        <item name="android:dropDownListViewStyle">@style/MyDropDownListView</item>
        <item name="android:actionBarTabStyle">@style/MyActionBarTabStyle</item>
        <item name="android:actionDropDownStyle">@style/MyDropDownNav</item>
        <item name="android:listChoiceIndicatorMultiple">@drawable/ad_btn_check_holo_light</item>
        <item name="android:listChoiceIndicatorSingle">@drawable/ad_btn_radio_holo_light</item>
    </style>

We can then apply this style to either an individual activity or to the entire application:

    <activity android:name=".MainActivity"
        android:label="@string/app_name"
        android:theme="@style/Theme.AndroidDevelopers"
        android:logo="@drawable/ad_logo">

Note that some of the system styles that we have overridden in this example will affect much more than the Action Bar. For example, overriding android:selectableItemBackground will affect many widgets which support a selectable state. This is useful for styling your entire application, but be sure to test that your customisations are applied consistently throughout.

Familiar but styled

Customising the action bar is a great way to extend your application’s branding to the standard control components. With this power, as they say, comes great responsibility. When customising the user interface you must take great care to ensure that your application remains legible and navigable. In particular, watch out for highlight colours which contrast poorly with text and provide drawables for all relevant states. Explore this demo application which exercises the functionality offered by the Action Bar and demonstrates how to theme it.

Android Developer Challenge, Sub-Saharan Africa!

Posted: 2011-04-14 07:56:37 UTC-07:00

[This post is by Bridgette Sexton, an innovation advocate for the African tech community. — Tim Bray]

In the past year alone, we have met with over 10,000 developers and techies across Sub-Saharan Africa. We are continually impressed by the ingenuity and enthusiasm of this community in solving real problems with technology. From applications that crowd-source traffic info to mobile registration of local businesses, handheld devices have taken center stage for consumers and developers in Africa. With a number of countries in the region hovering around 80-90% mobile penetration, mobile is the screen size for the web and the communication experience.

Correspondingly, at every Google event in Africa, Android is the hottest topic; we know why. Every day over 300,000 Android devices are activated globally! A growing number of these mobile devices are powering on for the first time in emerging markets like those in Africa. As Android users multiply, so does the appeal for developers of building apps on this free open-source platform.

An increasing number of users are searching for 'Android' on Google in Sub-Saharan Africa

For all these reasons and more, we are proud to be launching the Android Developer Challenge for Sub-Saharan Africa!

The Android Developer Challenge is designed to encourage the creation of cool and innovative Android mobile apps built by developers in Sub-Saharan Africa. Invent apps that delight users and you stand a chance to win an Android phone and $25,000 USD. To get started, choose from one of three defined eligible categories (see below), build an Android app in a team or by yourself, and submit it via the competition website by July 1st. The winning app will be announced on September 12th at G-Kenya. Get more details as well as Terms and Conditions on our site.

Categories for Entry:

- Entertainment / Media / Games

- Social Networking / Communication

- Productivity / Tools / Lifestyle

(See Terms & Conditions for more details!)

To launch this competition, we have teamed up with Google Technology User Groups (GTUGs) across Africa to host Android Developer Challenge events. Check out our website for Android gatherings near you, and get coding!

New Carrier Billing Options on Android Market

Posted: 2011-04-13 11:31:48 UTC-07:00

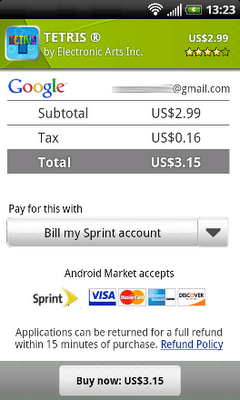

[This post is by Eric Chu, Android Developer Ecosystem. —Dirk Dougherty]

Since last year, we’ve been working to bring the convenience of Direct Carrier Billing to more Android Market users on more carrier networks. Building on the launches to T-Mobile US and AT&T users in 2010, we’ve recently launched Direct Carrier Billing to users on three popular networks in Japan -- SoftBank, KDDI, and NTT DOCOMO.

The momentum continues and today we’re excited to announce that Direct Carrier Billing is now available on Sprint. We've begun a phased roll-out of the service that will reach all users in the next few days. When complete, Android users on the Sprint network will be able to charge their Android Market purchases to their Sprint mobile bill with only a few clicks.

We believe that Direct Carrier Billing is a key payment option because it lets users purchase and pay for apps more easily. It’s also important because it offers a convenient way to buy in regions where credit cards are less common.

We are continuing to partner with more carriers around the world to offer carrier billing options to their subscribers. Watch for announcements of new payment options coming in the months ahead.

I think I’m having a Gene Amdahl moment (http://goo.gl/7v4kf)

Posted: 2011-04-07 11:32:07 UTC-07:00

[This post is by Andy Rubin, VP of Engineering —Tim Bray]

Recently, there’s been a lot of misinformation in the press about Android and Google’s role in supporting the ecosystem. I’m writing in the spirit of transparency and in an attempt to set the record straight. The Android community has grown tremendously since the launch of the first Android device in October 2008, but throughout we’ve remained committed to fostering the development of an open platform for the mobile industry and beyond.

We don’t believe in a “one size fits all” solution. The Android platform has already spurred the development of hundreds of different types of devices – many of which were not originally contemplated when the platform was first created. What amazes me is that even though the quantity and breadth of Android products being built has grown tremendously, it’s clear that quality and consistency continue to be top priorities. Miraculously, we are seeing the platform take on new use cases, features and form factors as it’s being introduced in new categories and regions while still remaining consistent and compatible for third party applications.

As always, device makers are free to modify Android to customize any range of features for Android devices. This enables device makers to support the unique and differentiating functionality of their products. If someone wishes to market a device as Android-compatible or include Google applications on the device, we do require the device to conform with some basic compatibility requirements. (After all, it would not be realistic to expect Google applications – or any applications for that matter – to operate flawlessly across incompatible devices). Our “anti-fragmentation” program has been in place since Android 1.0 and remains a priority for us to provide a great user experience for consumers and a consistent platform for developers. In fact, all of the founding members of the Open Handset Alliance agreed not to fragment Android when we first announced it in 2007. Our approach remains unchanged: there are no lock-downs or restrictions against customizing UIs. There are not, and never have been, any efforts to standardize the platform on any single chipset architecture.

Finally, we continue to be an open source platform and will continue releasing source code when it is ready. As I write this the Android team is still hard at work to bring all the new Honeycomb features to phones. As soon as this work is completed, we’ll publish the code. This temporary delay does not represent a change in strategy. We remain firmly committed to providing Android as an open source platform across many device types.

The volume and variety of Android devices in the market continues to exceed even our most optimistic expectations. We will continue to work toward an open and healthy ecosystem because we truly believe this is best for the industry and best for consumers.

The IO Ticket Contest

Posted: 2011-04-03 13:20:39 UTC-07:00

When Google I/O sold out so fast, we were kicking around ideas for how to get some of our ticket reserve into the hands of our favorite people: Dedicated developers. Someone floated the idea of a contest, so we had to pull one together double-quick. You can read the questions and first-round answers here.

We thought you would enjoy some statistics, mostly rounded-off:

- 2,800 people visited the contest page.

- 360 people tried answering the questions.

- 1 person got all six right.

- 200 people did well enough to get into Round 2.

- 70 people submitted apps.

- 38 of the apps worked well enough to be worth considering.

- 10 apps (exactly) got a “Nice” rating from the first-cut reviewer.

While we’re doing numbers, let’s investigate which of the Round-1 questions were hard. In increasing order of difficulty, identified by correct answer, we find: Dalvik (97.5% correct), 160 (96%), Looper (58.5%), LLVM (57%), fyiWillBeAdvancedByHostKThx (43%), and PhoneNumberFormattingTextWatcher (19.5%).

So, our thanks to the people who put in the work, and a particular tip of the hat to the deranged hackers er I mean creative developers who built three particularly-outstanding apps:

First, to Kris Jurgowski, who pulled an all-nighter and wrote a nifty little app... on a Motorola CLIQ running Android 1.5! Next, to Heliodor Jalba, whose app had some gravity-warping extras and was less than 11K in size. And finally, to Charles Vaughn, whose app included a hilarious “Party Mode” that brought a smile to everyone’s face.

Identifying App Installations

Posted: 2011-03-30 14:18:08 UTC-07:00

[The contents of this post grew out of an internal discussion featuring many of the usual suspects who’ve been authors in this space. — Tim Bray]

In the Android group, from time to time we hear complaints from developers about problems they’re having coming up with reliable, stable, unique device identifiers. This worries us, because we think that tracking such identifiers isn’t a good idea, and that there are better ways to achieve developers’ goals.

Tracking Installations

It is very common, and perfectly reasonable, for a developer to want to track individual installations of their apps. It sounds plausible just to call TelephonyManager.getDeviceId() and use that value to identify the installation. There are problems with this: First, it doesn’t work reliably (see below). Second, when it does work, that value survives device wipes (“Factory resets”) and thus you could end up making a nasty mistake when one of your customers wipes their device and passes it on to another person.

To track installations, you could for example use a UUID as an identifier, and simply create a new one the first time an app runs after installation. Here is a sketch of a class named “Installation” with one static method Installation.id(Context context). You could imagine writing more installation-specific data into the INSTALLATION file.

    import android.content.Context;

    import java.io.File;
    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.io.RandomAccessFile;
    import java.util.UUID;

    public class Installation {
        private static String sID = null;
        private static final String INSTALLATION = "INSTALLATION";

        public synchronized static String id(Context context) {
            if (sID == null) {
                File installation = new File(context.getFilesDir(), INSTALLATION);
                try {
                    if (!installation.exists())
                        writeInstallationFile(installation);
                    sID = readInstallationFile(installation);
                } catch (Exception e) {
                    throw new RuntimeException(e);
                }
            }
            return sID;
        }

        private static String readInstallationFile(File installation) throws IOException {
            RandomAccessFile f = new RandomAccessFile(installation, "r");
            byte[] bytes = new byte[(int) f.length()];
            f.readFully(bytes);
            f.close();
            return new String(bytes);
        }

        private static void writeInstallationFile(File installation) throws IOException {
            FileOutputStream out = new FileOutputStream(installation);
            String id = UUID.randomUUID().toString();
            out.write(id.getBytes());
            out.close();
        }
    }

Identifying Devices

Suppose you feel that for the needs of your application, you need an actual hardware device identifier. This turns out to be a tricky problem.

In the past, when every Android device was a phone, things were simpler: TelephonyManager.getDeviceId() is required to return (depending on the network technology) the IMEI, MEID, or ESN of the phone, which is unique to that piece of hardware.

However, there are problems with this approach:

- Non-phones: Wifi-only devices or music players that don’t have telephony hardware just don’t have this kind of unique identifier.

- Persistence: On devices which do have this, it persists across device data wipes and factory resets. It’s not clear at all if, in this situation, your app should regard this as the same device.

- Privilege: It requires READ_PHONE_STATE permission, which is irritating if you don’t otherwise use or need telephony.

- Bugs: We have seen a few instances of production phones for which the implementation is buggy and returns garbage, for example zeros or asterisks.

MAC Address

It may be possible to retrieve a MAC address from a device’s WiFi or Bluetooth hardware. We do not recommend using this as a unique identifier. To start with, not all devices have WiFi. Also, if the WiFi is not turned on, the hardware may not report the MAC address.

Serial Number

Since Android 2.3 (“Gingerbread”) this is available via android.os.Build.SERIAL. Devices without telephony are required to report a unique device ID here; some phones may do so also.

ANDROID_ID

More specifically, Settings.Secure.ANDROID_ID. This is a 64-bit quantity that is generated and stored when the device first boots. It is reset when the device is wiped.

ANDROID_ID seems a good choice for a unique device identifier. There are downsides: First, it is not 100% reliable on releases of Android prior to 2.2 (“Froyo”). Also, there has been at least one widely-observed bug in a popular handset from a major manufacturer, where every instance has the same ANDROID_ID.

Conclusion

For the vast majority of applications, the requirement is to identify a particular installation, not a physical device. Fortunately, doing so is straightforward.

There are many good reasons for avoiding the attempt to identify a particular device. For those who want to try, the best approach is probably the use of ANDROID_ID on anything reasonably modern, with some fallback heuristics for legacy devices.
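To try the per-installation approach outside of Android, the same idea can be sketched on a plain JVM. This is only an illustration under stated assumptions: the InstallationId class name is hypothetical, and the directory argument stands in for what Context.getFilesDir() would supply on a device.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.UUID;

// Plain-JVM sketch of the per-installation ID idea. The directory argument
// is an assumption standing in for Context.getFilesDir() on Android.
final class InstallationId {
    private static final String FILE_NAME = "INSTALLATION";

    private InstallationId() {}

    static synchronized String id(Path dir) {
        Path file = dir.resolve(FILE_NAME);
        try {
            if (!Files.exists(file)) {
                // First run after installation: create and persist a fresh UUID.
                String fresh = UUID.randomUUID().toString();
                Files.write(file, fresh.getBytes(StandardCharsets.UTF_8));
            }
            // Later runs read back the value that was stored the first time.
            return new String(Files.readAllBytes(file), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}

class InstallationIdDemo {
    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("install-demo");
        String first = InstallationId.id(dir);
        String second = InstallationId.id(dir);
        // The same directory yields the same ID on every call.
        System.out.println(first.equals(second));
    }
}
```

Deleting the directory (the analogue of uninstalling the app or wiping its data) causes a new ID to be generated on the next call, which is the behaviour the installation-tracking use case wants.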

In-app Billing Launched on Android Market

Posted: 2011-03-29 17:44:38 UTC-07:00

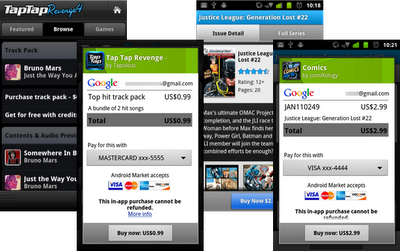

[This post is by Eric Chu, Android Developer Ecosystem. —Dirk Dougherty]

Today, we're pleased to announce the launch of Android Market In-app Billing to developers and users. As an Android developer, you will now be able to publish apps that use In-app Billing and your users can make purchases from within your apps.

In-app Billing gives you more ways to monetize your apps with try-and-buy, virtual goods, upgrades, and other billing models. If you aren’t yet familiar with In-app Billing, we encourage you to learn more about it.

Several apps launching today are already using the service, including Tap Tap Revenge by Disney Mobile; Comics by ComiXology; Gun Bros, Deer Hunter Challenge HD, and WSOP3 by Glu Mobile; and Dungeon Defenders: FW Deluxe by Trendy Entertainment.

To try In-app Billing in your apps, start with the detailed documentation and complete sample app provided, which show how to implement the service in your app, set up in-app product lists in Android Market, and test your implementation. Also, it’s absolutely essential that you review the security guidelines to make sure your billing implementation is secure.

We look forward to seeing how you’ll use this new service in your apps!

In-App Billing on Android Market: Ready for Testing

Posted: 2011-03-24 13:26:29 UTC-07:00

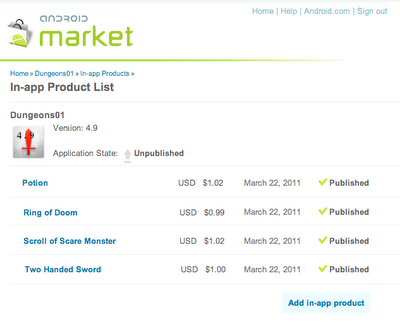

[This post is by Eric Chu, Android Developer Ecosystem. —Dirk Dougherty]

Back in January we announced our plan to introduce Android Market In-app Billing this quarter. We're pleased to let you know that we will be launching In-app Billing next week.

In preparation for the launch, we are opening up Android Market for upload and end-to-end testing of your apps that use In-app Billing. You can now upload your apps to the Developer Console, create a catalog of in-app products, and set prices for them. You can then set up accounts to test in-app purchases. During these test transactions, the In-app Billing service interacts with your app exactly as it will for actual users and live transactions.

Note that although you can upload apps during this test development phase, you won’t be able to actually publish the apps to users until the full launch of the service next week.

To get you started, we’ve updated the developer documentation with information about how to set up product lists and test your in-app products. Also, it is absolutely essential that you review the security guidelines to make sure your billing implementation is secure.

We encourage you to start uploading and testing your apps right away.

Memory Analysis for Android Applications

Posted: 2011-03-24 12:03:01 UTC-07:00

[This post is by Patrick Dubroy, an Android engineer who writes about programming, usability, and interaction on his personal blog. — Tim Bray]

The Dalvik runtime may be garbage-collected, but that doesn't mean you can ignore memory management. You should be especially mindful of memory usage on mobile devices, where memory is more constrained. In this article, we're going to take a look at some of the memory profiling tools in the Android SDK that can help you trim your application's memory usage.

Some memory usage problems are obvious. For example, if your app leaks memory every time the user touches the screen, it will probably trigger an OutOfMemoryError eventually and crash your app. Other problems are more subtle, and may just degrade the performance of both your app (as garbage collections are more frequent and take longer) and the entire system.

Tools of the trade

The Android SDK provides two main ways of profiling the memory usage of an app: the Allocation Tracker tab in DDMS, and heap dumps. The Allocation Tracker is useful when you want to get a sense of what kinds of allocation are happening over a given time period, but it doesn't give you any information about the overall state of your application's heap. For more information about the Allocation Tracker, see the article on Tracking Memory Allocations. The rest of this article will focus on heap dumps, which are a more powerful memory analysis tool.

A heap dump is a snapshot of an application's heap, which is stored in a binary format called HPROF. Dalvik uses a format that is similar, but not identical, to the HPROF tool in Java. There are a few ways to generate a heap dump of a running Android app. One way is to use the Dump HPROF file button in DDMS. If you need to be more precise about when the dump is created, you can also create a heap dump programmatically by using the android.os.Debug.dumpHprofData() function.

To analyze a heap dump, you can use a standard tool like jhat or the Eclipse Memory Analyzer (MAT). However, first you'll need to convert the .hprof file from the Dalvik format to the J2SE HPROF format. You can do this using the hprof-conv tool provided in the Android SDK. For example:

    hprof-conv dump.hprof converted-dump.hprof

Example: Debugging a memory leak

In the Dalvik runtime, the programmer doesn't explicitly allocate and free memory, so you can't really leak memory like you can in languages like C and C++. A "memory leak" in your code is when you keep a reference to an object that is no longer needed. Sometimes a single reference can prevent a large set of objects from being garbage collected.

Let's walk through an example using the Honeycomb Gallery sample app from the Android SDK. It's a simple photo gallery application that demonstrates how to use some of the new Honeycomb APIs. (To build and download the sample code, see the instructions.) We're going to deliberately add a memory leak to this app in order to demonstrate how it could be debugged.
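Before touching the sample app, the definition of a leak given above can be made concrete in plain Java. The sketch below is only an illustration with hypothetical names (BoundedCache, CacheDemo), and byte arrays stand in for image data. It contrasts a static map that only ever grows, so every value stays reachable, with a size-bounded map built on LinkedHashMap's removeEldestEntry hook, which drops the least recently used entry once a limit is exceeded.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration: a map that evicts its least recently used
// entry once it holds more than maxEntries items.
final class BoundedCache<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    BoundedCache(int maxEntries) {
        // accessOrder = true keeps entries ordered from least to most recently used.
        super(16, 0.75f, true);
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        // Called by LinkedHashMap after each insertion; returning true evicts 'eldest'.
        return size() > maxEntries;
    }
}

class CacheDemo {
    // Static and never pruned: everything put here stays reachable forever.
    static final Map<String, byte[]> LEAKY = new HashMap<String, byte[]>();

    // Static but bounded: older entries become eligible for garbage collection.
    static final Map<String, byte[]> BOUNDED = new BoundedCache<String, byte[]>(3);

    public static void main(String[] args) {
        for (int i = 0; i < 10; i++) {
            LEAKY.put("image." + i, new byte[1024]);
            BOUNDED.put("image." + i, new byte[1024]);
        }
        System.out.println("leaky entries:   " + LEAKY.size());
        System.out.println("bounded entries: " + BOUNDED.size());
    }
}
```

The unbounded map ends up holding all ten arrays, while the bounded one keeps only the three most recent. A real image cache would more likely bound itself by total bytes than by entry count; the entry limit here is just the simplest policy to show.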

Imagine that we want to modify this app to pull images from the network. In order to make it more responsive, we might decide to implement a cache which holds recently-viewed images. We can do that by making a few small changes to ContentFragment.java. At the top of the class, let's add a new static variable:

    private static HashMap<String,Bitmap> sBitmapCache = new HashMap<String,Bitmap>();

This is where we'll cache the Bitmaps that we load. Now we can change the updateContentAndRecycleBitmap() method to check the cache before loading, and to add Bitmaps to the cache after they're loaded.

    void updateContentAndRecycleBitmap(int category, int position) {
        if (mCurrentActionMode != null) {
            mCurrentActionMode.finish();
        }

        // Get the bitmap that needs to be drawn and update the ImageView.

        // Check if the Bitmap is already in the cache
        String bitmapId = "" + category + "." + position;
        mBitmap = sBitmapCache.get(bitmapId);

        if (mBitmap == null) {
            // It's not in the cache, so load the Bitmap and add it to the cache.
            // DANGER! We add items to this cache without ever removing any.
            mBitmap = Directory.getCategory(category).getEntry(position)
                    .getBitmap(getResources());
            sBitmapCache.put(bitmapId, mBitmap);
        }
        ((ImageView) getView().findViewById(R.id.image)).setImageBitmap(mBitmap);
    }

I've deliberately introduced a memory leak here: we add Bitmaps to the cache without ever removing them. In a real app, we'd probably want to limit the size of the cache in some way.

Examining heap usage in DDMS

The Dalvik Debug Monitor Server (DDMS) is one of the primary Android debugging tools. DDMS is part of the ADT Eclipse plug-in, and a standalone version can also be found in the tools/ directory of the Android SDK. For more information on DDMS, see Using DDMS.

Let's use DDMS to examine the heap usage of this app. You can start up DDMS in one of two ways:

- from Eclipse: click Window > Open Perspective > Other... > DDMS

- or from the command line: run ddms (or ./ddms on Mac/Linux) in the tools/ directory

Select the process com.example.android.hcgallery in the left pane, and then click the Show heap updates button in the toolbar. Then, switch to the VM Heap tab in DDMS. It shows some basic stats about our heap memory usage, updated after every GC. To see the first update, click the Cause GC button.

We can see that our live set (the Allocated column) is a little over 8MB. Now flip through the photos, and watch that number go up. Since there are only 13 photos in this app, the amount of memory we leak is bounded. In some ways, this is the worst kind of leak to have, because we never get an OutOfMemoryError indicating that we are leaking.

Creating a heap dump

Let's use a heap dump to track down the problem. Click the Dump HPROF file button in the DDMS toolbar, choose where you want to save the file, and then run hprof-conv on it. In this example, I'll be using the standalone version of MAT (version 1.0.1), available from the MAT download site.

If you're running ADT (which includes a plug-in version of DDMS) and have MAT installed in Eclipse as well, clicking the “dump HPROF” button will automatically do the conversion (using hprof-conv) and open the converted HPROF file in Eclipse, where MAT will display it.

Analyzing heap dumps using MAT

Start up MAT and load the converted HPROF file we just created. MAT is a powerful tool, and it's beyond the scope of this article to explain all its features, so I'm just going to show you one way you can use it to detect a leak: the Histogram view. The Histogram view shows a list of classes sortable by the number of instances, the shallow heap (total amount of memory used by all instances), or the retained heap (total amount of memory kept alive by all instances, including other objects that they have references to).

If we sort by shallow heap, we can see that instances of byte[] are at the top. As of Android 3.0 (Honeycomb), the pixel data for Bitmap objects is stored in byte arrays (previously it was not stored in the Dalvik heap), and based on the size of these objects, it's a safe bet that they are the backing memory for our leaked bitmaps.

Right-click on the byte[] class and select List Objects > with incoming references. This produces a list of all byte arrays in the heap, which we can sort based on Shallow Heap usage.

Pick one of the big objects, and drill down on it. This will show you the path from the root set to the object -- the chain of references that keeps this object alive. Lo and behold, there's our bitmap cache!

MAT can't tell us for sure that this is a leak, because it doesn't know whether these objects are needed or not -- only the programmer can do that. In this case, the cache is using a large amount of memory relative to the rest of the application, so we might consider limiting the size of the cache.

Comparing heap dumps with MAT

When debugging memory leaks, sometimes it's useful to compare the heap state at two different points in time. To do this, you'll need to create two separate HPROF files (don't forget to convert them using hprof-conv).

Here's how you can compare two heap dumps in MAT (it's a little complicated):

1. Open the first HPROF file (using File > Open Heap Dump).

2. Open the Histogram view.

3. In the Navigation History view (use Window > Navigation History if it's not visible), right click on histogram and select Add to Compare Basket.

4. Open the second HPROF file and repeat steps 2 and 3.

5. Switch to the Compare Basket view, and click Compare the Results (the red "!" icon in the top right corner of the view).

Conclusion

In this article, I've shown how the Allocation Tracker and heap dumps can give you a better sense of your application's memory usage. I also showed how the Eclipse Memory Analyzer (MAT) can help you track down memory leaks in your app. MAT is a powerful tool, and I've only scratched the surface of what you can do with it. If you'd like to learn more, I recommend reading some of these articles:

- Memory Analyzer News: The official blog of the Eclipse MAT project

- Markus Kohler's Java Performance blog has many helpful articles, including Analysing the Memory Usage of Android Applications with the Eclipse Memory Analyzer and 10 Useful Tips for the Eclipse Memory Analyzer.
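As a closing note on the leak introduced at the start of this article: one common way to limit the size of a cache like sBitmapCache is a least-recently-used (LRU) policy. Here's a minimal sketch in plain Java, built on java.util.LinkedHashMap in access order. The class name BoundedCache and the entry limit are hypothetical and not part of the sample app; the value type is generic so the sketch runs without Android, and in the gallery it would be Bitmap.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical LRU cache: once more than maxEntries are stored,
 * the least recently accessed entry is evicted.
 */
class BoundedCache<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    BoundedCache(int maxEntries) {
        // accessOrder = true: entries are ordered from least to most recently accessed.
        super(16, 0.75f, true);
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        // Consulted by put() after each insertion; returning true drops the eldest entry.
        return size() > maxEntries;
    }
}
```

Counting entries is a crude limit for bitmaps, since they can differ a lot in size; a limit based on the total number of bytes held would probably track heap usage more closely.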

Application Stats on Android Market

Posted: 2011-03-17 10:21:04 UTC-07:00

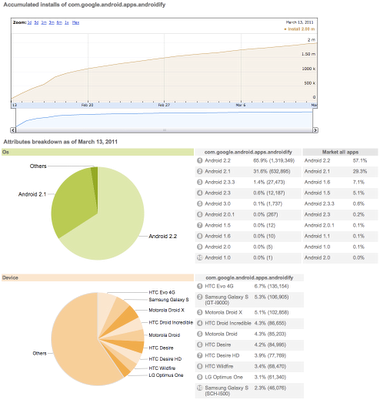

[This post is by Eric Chu, Android Developer Ecosystem. —Dirk Dougherty]

On the Android Market team, it’s been our goal to bring you improved ways of seeing and understanding the installation performance of your published applications. We know that this information is critical in helping you tune your development and marketing efforts. Today I’m pleased to let you know about an important new feature that we’ve added to Android Market called Application Statistics.

Application Statistics is a new type of dashboard in the Market Developer Console that gives you an overview of the installation performance of your apps. It provides charts and tables that summarize each app’s active installation trend over time, as well as its distribution across key dimensions such as Android platform versions, devices, user countries, and user languages. For additional context, the dashboard also shows the comparable aggregate distribution for all app installs from Android Market (numbering in the billions). You could use this data to observe how your app performs relative to the rest of Market or decide what to develop next.

To start with, we’ve seeded the Application Statistics dashboards with data going back to December 22, 2010. Going forward, we’ll be updating the data daily.

We encourage you to check out these new dashboards and we hope they’ll give you new and useful insights into your apps’ installation performance. You can access the Statistics dashboards from the main Listings page in the Developer Console.

Watch for more announcements soon. We are continuing to work hard to deliver more reporting features to help you manage your products successfully on Android Market.

Android 3.0 Hardware Acceleration

Posted: 2011-03-16 09:47:57 UTC-07:00

[This post is by Romain Guy, who likes things on your screen to move fast. —Tim Bray]

One of the biggest changes we made to Android in this release is the addition of a new rendering pipeline so that applications can benefit from hardware accelerated 2D graphics. Hardware accelerated graphics is nothing new to the Android platform; it has always been used for window composition and OpenGL games, for instance. With this new rendering pipeline, however, applications can benefit from an extra boost in performance. On a Motorola Xoom device, all the standard applications like Browser and Calendar use hardware-accelerated 2D graphics.

In this article, I will show you how to enable the hardware accelerated 2D graphics pipeline in your application and give you a few tips on how to use it properly.

Go faster

To enable the hardware accelerated 2D graphics, open your AndroidManifest.xml file and add the following attribute to the <application /> tag:

    android:hardwareAccelerated="true"

If your application uses only standard widgets and drawables, this should be all you need to do. Once hardware acceleration is enabled, all drawing operations performed on a View's Canvas are performed using the GPU.

If you have custom drawing code you might need to do a bit more, which is in part why hardware acceleration is not enabled by default. And it's why you might want to read the rest of this article, to understand some of the important details of acceleration.

Controlling hardware acceleration

Because of the characteristics of the new rendering pipeline, you might run into issues with your application. Problems usually manifest themselves as invisible elements, exceptions or different-looking pixels. To help you, Android gives you 4 different ways to control hardware acceleration. You can enable or disable it on the following elements:

- Application

- Activity

- Window

- View

To enable or disable hardware acceleration at the application or activity level, use the XML attribute mentioned earlier. The following snippet enables hardware acceleration for the entire application but disables it for one activity:

    <application android:hardwareAccelerated="true">
        <activity ... />
        <activity android:hardwareAccelerated="false" />
    </application>

If you need more fine-grained control, you can enable hardware acceleration for a given window at runtime:

    getWindow().setFlags(
            WindowManager.LayoutParams.FLAG_HARDWARE_ACCELERATED,
            WindowManager.LayoutParams.FLAG_HARDWARE_ACCELERATED);

Note that you currently cannot disable hardware acceleration at the window level. Finally, hardware acceleration can be disabled on individual views:

    view.setLayerType(View.LAYER_TYPE_SOFTWARE, null);

Layer types have many other usages that will be described later.

Am I hardware accelerated?

It is sometimes useful for an application, or more likely a custom view, to know whether it currently is hardware accelerated. This is particularly useful if your application does a lot of custom drawing and not all operations are properly supported by the new rendering pipeline.

There are two different ways to check whether the application is hardware accelerated:

- View.isHardwareAccelerated() returns true if the View is attached to a hardware accelerated window

- Canvas.isHardwareAccelerated() returns true if the Canvas is hardware accelerated

If you must do this check in your drawing code, it is highly recommended to use Canvas.isHardwareAccelerated() instead of View.isHardwareAccelerated(). Indeed, even when a View is attached to a hardware accelerated window, it can be drawn using a non-hardware accelerated Canvas. This happens for instance when drawing a View into a bitmap for caching purposes.

What drawing operations are supported?

The current hardware accelerated 2D pipeline supports the most commonly used Canvas operations, and then some. We implemented all the operations needed to render the built-in applications, all the default widgets and layouts, and common advanced visual effects (reflections, tiled textures, etc.). There are however a few operations that are currently not supported, but might be in a future version of Android:

- Canvas
  - clipPath
  - clipRegion
  - drawPicture
  - drawPoints
  - drawPosText
  - drawTextOnPath
  - drawVertices

- Paint
  - setLinearText
  - setMaskFilter
  - setRasterizer

In addition, some operations behave differently when hardware acceleration is enabled:

- Canvas
  - clipRect: XOR, Difference and ReverseDifference clip modes are ignored; 3D transforms do not apply to the clip rectangle
  - drawBitmapMesh: colors array is ignored
  - drawLines: anti-aliasing is not supported
  - setDrawFilter: can be set, but ignored

- Paint
  - setDither: ignored
  - setFilterBitmap: filtering is always on
  - setShadowLayer: works with text only

- ComposeShader
  - A ComposeShader can only contain shaders of different types (a BitmapShader and a LinearGradient for instance, but not two instances of BitmapShader)
  - A ComposeShader cannot contain a ComposeShader

If drawing code in one of your views is affected by any of the missing features or limitations, you don't have to miss out on the advantages of hardware acceleration for your overall application. Instead, consider rendering the problematic view into a bitmap or setting its layer type to LAYER_TYPE_SOFTWARE. In both cases, you will switch back to the software rendering pipeline.

Dos and don'ts

Switching to hardware accelerated 2D graphics is a great way to get smoother animations and faster rendering in your application but it is by no means a magic bullet. Your application should be designed and implemented to be GPU friendly. It is easier than you might think if you follow these recommendations:

- Reduce the number of Views in your application: the more Views the system has to draw, the slower it will be. This applies to the software pipeline as well; it is one of the easiest ways to optimize your UI.

- Avoid overdraw: always make sure that you are not drawing too many layers on top of each other. In particular, make sure to remove any Views that are completely obscured by other opaque views on top of them. If you need to draw several layers blended on top of each other, consider merging them into a single one. A good rule of thumb with current hardware is to not draw more than 2.5 times the number of pixels on screen per frame (and transparent pixels in a bitmap count!).

- Don't create render objects in draw methods: a common mistake is to create a new Paint, or a new Path, every time a rendering method is invoked. This is not only wasteful, forcing the system to run the GC more often, it also bypasses caches and optimizations in the hardware pipeline.

- Don't modify shapes too often: complex shapes, paths and circles for instance, are rendered using texture masks. Every time you create or modify a Path, the hardware pipeline must create a new mask, which can be expensive.

- Don't modify bitmaps too often: every time you change the content of a bitmap, it needs to be uploaded again as a GPU texture the next time you draw it.

- Use alpha with care: when a View is made translucent using View.setAlpha(), an AlphaAnimation or an ObjectAnimator animating the “alpha” property, it is rendered in an off-screen buffer which doubles the required fill-rate. When applying alpha on very large views, consider setting the View's layer type to LAYER_TYPE_HARDWARE.
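To make the overdraw rule of thumb from the list above concrete, here's a small worked example in plain Java. It only does the arithmetic: the class OverdrawBudget, its method names and the 1280x800 screen size used in the note below are assumptions for illustration, not part of any Android API.

```java
/** Hypothetical helper for the "2.5 times the pixels on screen" rule of thumb. */
final class OverdrawBudget {
    // Rule-of-thumb factor quoted in the recommendations above.
    static final double MAX_OVERDRAW_FACTOR = 2.5;

    /** Maximum number of pixels to draw per frame for a screen of the given size. */
    static long pixelBudget(int screenWidth, int screenHeight) {
        return (long) (MAX_OVERDRAW_FACTOR * screenWidth * screenHeight);
    }

    /** True if the summed pixel counts of everything drawn stay within the budget. */
    static boolean withinBudget(int screenWidth, int screenHeight, long... layerPixelCounts) {
        long total = 0;
        for (long count : layerPixelCounts) {
            total += count; // transparent pixels count too
        }
        return total <= pixelBudget(screenWidth, screenHeight);
    }
}
```

On an assumed 1280x800 screen the budget works out to 2,560,000 pixels per frame. Two full-screen layers (2,048,000 pixels) fit; adding a third full-screen layer, even a mostly transparent one, brings the total to 3,072,000 and exceeds it.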

View layers

Since Android 1.0, Views have had the ability to render into off-screen buffers, either by using a View's drawing cache, or by using Canvas.saveLayer(). Off-screen buffers, or layers, have several interesting usages. They can be used to get better performance when animating complex Views or to apply composition effects. For instance, fade effects are implemented by using Canvas.saveLayer() to temporarily render a View into a layer and then compositing it back on screen with an opacity factor.

Because layers are so useful, Android 3.0 gives you more control on how and when to use them. To do so, we have introduced a new API called View.setLayerType(int type, Paint p). This API takes two parameters: the type of layer you want to use and an optional Paint that describes how the layer should be composited. The paint parameter may be used to apply color filters, special blending modes or opacity to a layer. A View can use one of 3 layer types:

- LAYER_TYPE_NONE: the View is rendered normally, and is not backed by an off-screen buffer.

- LAYER_TYPE_HARDWARE: the View is rendered in hardware into a hardware texture if the application is hardware accelerated. If the application is not hardware accelerated, this layer type behaves the same as LAYER_TYPE_SOFTWARE.

- LAYER_TYPE_SOFTWARE: the View is rendered in software into a bitmap.

The type of layer you will use depends on your goal:

- Performance: use a hardware layer type to render a View into a hardware texture. Once a View is rendered into a layer, its drawing code does not have to be executed until the View calls invalidate(). Some animations, for instance alpha animations, can then be applied directly onto the layer, which is very efficient for the GPU to do.

- Visual effects: use a hardware or software layer type and a Paint to apply special visual treatments to a View. For instance, you can draw a View in black and white using a ColorMatrixColorFilter.

- Compatibility: use a software layer type to force a View to be rendered in software. This is an easy way to work around limitations of the hardware rendering pipeline.

Layers and animations

Hardware-accelerated 2D graphics help deliver a faster and smoother user experience, especially when it comes to animations. Running an animation at 60 frames per second is not always possible when animating complex views that issue a lot of drawing operations. If you are running an animation in your application and do not obtain the smooth results you want, consider enabling hardware layers on your animated views.

When a View is backed by a hardware layer, some of its properties are handled by the way the layer is composited on screen. Setting these properties will be efficient because they do not require the view to be invalidated and redrawn. Here is the list of properties that will affect the way the layer is composited; calling the setter for any of these properties will result in optimal invalidation and no redraw of the targeted View:

- alpha: to change the layer's opacity

- x, y, translationX, translationY: to change the layer's position

- scaleX, scaleY: to change the layer's size

- rotation, rotationX, rotationY: to change the layer's orientation in 3D space

- pivotX, pivotY: to change the layer's transformation origin

These properties are the names used when animating a View with an ObjectAnimator. If you want to set/get these properties, call the appropriate setter or getter. For instance, to modify the alpha property, call setAlpha(). The following code snippet shows the most efficient way to rotate a View in 3D around the Y axis:

    view.setLayerType(View.LAYER_TYPE_HARDWARE, null);
    ObjectAnimator.ofFloat(view, "rotationY", 180).start();

Since hardware layers consume video memory, it is highly recommended you enable them only for the duration of the animation. This can be achieved with animation listeners:

    view.setLayerType(View.LAYER_TYPE_HARDWARE, null);
    ObjectAnimator animator = ObjectAnimator.ofFloat(view, "rotationY", 180);
    animator.addListener(new AnimatorListenerAdapter() {
        @Override
        public void onAnimationEnd(Animator animation) {
            // Release the hardware layer once the animation is done.
            view.setLayerType(View.LAYER_TYPE_NONE, null);
        }
    });
    animator.start();

New drawing model

Along with hardware-accelerated 2D graphics, Android 3.0 introduces another major change in the UI toolkit’s drawing model: display lists, which are only enabled when hardware acceleration is turned on. To fully understand display lists and how they may affect your application it is important to also understand how Views are drawn.

Whenever an application needs to update a part of its UI, it invokes invalidate() (or one of its variants) on any View whose content has changed. The invalidation messages are propagated all the way up the view hierarchy to compute the dirty region, that is, the region of the screen that needs to be redrawn. The system then draws any View in the hierarchy that intersects with the dirty region. The drawing model is therefore made of two stages:

- Invalidate the hierarchy

- Draw the hierarchy
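The two stages above can be modeled in a few lines of plain Java. This is only a toy sketch of the idea, not how the UI toolkit is implemented: Box, ToyView and ToyHierarchy are hypothetical stand-ins (not android.graphics.Rect or android.view.View), and the dirty region is simplified to a single bounding rectangle.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a rectangle on screen. */
final class Box {
    final int left, top, right, bottom;

    Box(int left, int top, int right, int bottom) {
        this.left = left; this.top = top; this.right = right; this.bottom = bottom;
    }

    boolean intersects(Box o) {
        return left < o.right && o.left < right && top < o.bottom && o.top < bottom;
    }

    Box union(Box o) {
        return new Box(Math.min(left, o.left), Math.min(top, o.top),
                Math.max(right, o.right), Math.max(bottom, o.bottom));
    }
}

/** Hypothetical stand-in for a View; it only counts how often its drawing code runs. */
final class ToyView {
    final String name;
    final Box bounds;
    int drawCount = 0;

    ToyView(String name, Box bounds) { this.name = name; this.bounds = bounds; }
}

final class ToyHierarchy {
    private final List<ToyView> views = new ArrayList<ToyView>();
    private Box dirty = null; // accumulated dirty region; null when nothing is dirty

    void add(ToyView v) { views.add(v); }

    /** Stage 1: invalidating a view grows the dirty region. */
    void invalidate(ToyView v) {
        dirty = (dirty == null) ? v.bounds : dirty.union(v.bounds);
    }

    /** Stage 2: every view that intersects the dirty region runs its drawing code. */
    void drawPass() {
        if (dirty == null) return;
        for (ToyView v : views) {
            if (v.bounds.intersects(dirty)) v.drawCount++;
        }
        dirty = null;
    }
}
```

If a small button sits on top of a large map view and only the button is invalidated, drawPass() still increments the map's drawCount, because the map intersects the dirty region. A view elsewhere on screen is left alone.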

There are unfortunately two drawbacks to this approach. First, this drawing model requires execution of a lot of code on every draw pass. Imagine for instance your application calls invalidate() on a button and that button sits on top of a more complex View like a MapView. When it comes time to draw, the drawing code of the MapView will be executed even though the MapView itself has not changed.

The second issue with that approach is that it can hide bugs in your application. Since views are redrawn anytime they intersect with the dirty region, a View whose content you changed might be redrawn even though invalidate() was not called on it. When this happens, you are relying on another View getting invalidated to obtain the proper behavior. Needless to say, this behavior can change every time you modify your application ever so slightly. Remember this rule: always call invalidate() on a View whenever you modify data or state that affects this View’s drawing code. This applies only to custom code since setting standard properties, like the background color or the text in a TextView, will cause invalidate() to be called properly internally.

Android 3.0 still relies on invalidate() to request screen updates and draw() to render views. The difference is in how the drawing code is handled internally. Rather than executing the drawing commands immediately, the UI toolkit now records them inside display lists. This means that display lists do not contain any logic, but rather the output of the view hierarchy’s drawing code. Another interesting optimization is that the system only needs to record/update display lists for views marked dirty by an invalidate() call; views that have not been invalidated can be redrawn simply by re-issuing the previously recorded display list. The new drawing model now contains 3 stages:

- Invalidate the hierarchy

- Record/update display lists

- Draw the display lists
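Here's a toy model of these three stages in plain Java. It is a sketch under loud assumptions: RecordedView and DisplayListRenderer are hypothetical names, a display list is reduced to a list of strings, and none of this reflects the actual classes inside the UI toolkit. It does show the key property, though: drawing code runs only for invalidated views, and everything else is replayed from the previous recording.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a View whose drawing code records commands into a display list. */
final class RecordedView {
    private final List<String> displayList = new ArrayList<String>();
    private boolean dirty = true; // a new view has nothing recorded yet
    private String content;
    int recordCount = 0;          // how many times the drawing code actually ran

    RecordedView(String content) { this.content = content; }

    /** Changes state WITHOUT invalidating, to mimic the bug described in this article. */
    void setContentWithoutInvalidate(String content) { this.content = content; }

    /** Stage 1: mark the view as needing a new recording. */
    void invalidate() { dirty = true; }

    /** Stage 2: run the drawing code, but only if the view was invalidated. */
    void recordIfDirty() {
        if (!dirty) return;
        displayList.clear();
        displayList.add("drawText(" + content + ")");
        recordCount++;
        dirty = false;
    }

    /** Stage 3: re-issue the recorded commands; no drawing code runs here. */
    List<String> replay() { return new ArrayList<String>(displayList); }
}

final class DisplayListRenderer {
    /** Runs stages 2 and 3 for one frame and returns the commands that were issued. */
    static List<String> drawFrame(List<RecordedView> views) {
        List<String> issued = new ArrayList<String>();
        for (RecordedView v : views) v.recordIfDirty();
        for (RecordedView v : views) issued.addAll(v.replay());
        return issued;
    }
}
```

In this model, calling setContentWithoutInvalidate() and then drawFrame() replays the stale recording, so the old content stays on screen until invalidate() is called.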

With this model, you cannot rely on a View intersecting the dirty region to have its draw() method executed anymore: to ensure that a View’s display list will be recorded, you must call invalidate(). This kind of bug becomes very obvious with hardware acceleration turned on and is easy to fix: you would see the previous content of a View after changing it.

Using display lists also benefits animation performance. In the previous section, we saw that setting specific properties (alpha, rotation, etc.) does not require invalidating the targeted View. This optimization also applies to views with display lists (any View when your application is hardware accelerated). Let’s imagine you want to change the opacity of a ListView embedded inside a LinearLayout, above a Button. Here is what the (simplified) display list of the LinearLayout looks like before changing the list’s opacity:

    DrawDisplayList(ListView)
    DrawDisplayList(Button)

After invoking listView.setAlpha(0.5f) the display list now contains this:

    SaveLayerAlpha(0.5)
    DrawDisplayList(ListView)
    Restore
    DrawDisplayList(Button)

The complex drawing code of ListView was not executed. Instead the system only updated the display list of the much simpler LinearLayout. In previous versions of Android, or in an application without hardware acceleration enabled, the drawing code of both the list and its parent would have to be executed again.

It’s your turn

Enabling hardware accelerated 2D graphics in your application is easy, particularly if you rely solely on standard views and drawables. Just keep in mind the few limitations and potential issues described in this document and make sure to thoroughly test your application!

Renderscript Part 2

Posted: 2011-03-10 16:18:59 UTC-08:00

[This post is by R. Jason Sams, an Android engineer who specializes in graphics, performance tuning, and software architecture. —Tim Bray]

In Introducing Renderscript I gave a brief overview of this technology. In this post I’ll look at “compute” in more detail. In Renderscript we use “compute” to mean offloading of data processing from Dalvik code to Renderscript code which may run on the same or different processor(s).

Renderscript’s Design Goals

Renderscript has three primary goals, given here from most to least important.

Portability: Application code needs to be able to run across all devices, even those with radically different hardware. ARM currently comes in several variants: with and without VFP, with and without NEON, and with various register counts. Beyond ARM, there are other CPU architectures like x86, several GPU architectures, and even more DSP architectures.

Performance: The second objective is to get as much performance as possible within the constraints of Portability. For Renderscript to make sense we need to achieve much greater performance than established solutions.

Usability: The third goal is to simplify development as much as possible. Where possible we automate steps to avoid glue code and other developer busy work.

Those three goals lead to several design trade-offs. It’s these trade-offs that separate Renderscript from the existing approaches on the device, such as Dalvik or the NDK. They should be thought of as different tools intended to solve different problems.

Core Design Choices

The first choice that needed to be made is what language should be used. When it comes to languages there are almost unlimited options. Shading style languages, C, and C++ were considered. In the end the shading style languages were ruled out due to the need to be able to manipulate data structures for graphical applications such as scene graphs. The lack of pointers and recursion were considered crippling limitations for usability. C++ on the other hand was very desirable but ran into issues with portability. The advanced C++ features are very difficult to run on non-CPU hardware. In the end we chose to base Renderscript on C99 because it offers equal performance to the other choices, is very well understood by developers, and poses no issues running on a wide range of hardware.

The next design trade-off was work flow. Specifically we focused on how to convert source code to machine code. We explored several options and actually implemented two different solutions during the development of Renderscript. The older versions (Eclair through Gingerbread) compiled the C source code all the way to machine code on the device. While this had some nice properties, such as the ability for applications to generate source on the fly, it turned out to be a usability problem. Having to compile your app, install it, run it, then find your syntax error was painful. Also the weaker CPU in devices limited the static analysis and scope of optimizations that could be done.

Then we switched to LLVM, moving to a model where scripts are compiled and analyzed on the host using a modified version of clang. We perform high level optimizations at this stage, then emit LLVM bitcode. The translation of the intermediate bitcode to machine code still happens on the device (along with additional device-specific optimizations).

Our last big trade-off for compute was thread launching. The trade-off here is between performance and portability. Given sufficient knowledge, existing compute solutions allow a developer to tune an application for a specific hardware platform to the detriment of others. Given unlimited time and resources developers could tune for every hardware combination. While testing and tuning a variety of devices is never bad, no amount of work allows them to tune for unreleased hardware they don’t yet have. A more portable solution places the tuning burden on the runtime, providing greater average performance at the cost of peak performance. Given that the number one goal was portability we chose to place this burden on the runtime.

A secondary effect of choosing runtime thread-launch management is that dynamic decisions can be made about where to run a script. For example, some compute hardware can support pointers and recursion while others cannot. We could have chosen to disallow these things and give developers a lowest common denominator API, but we chose to instead let the runtime analyze the scripts. This allows developers to get full use of hardware that supports these features, since there is always a fully featured CPU to fall back upon. In the end, developers can focus on writing good apps and the hardware manufacturers can compete on making the most fully featured and efficient hardware. As new features appear, applications will benefit without application code changes.

Usability was a major driver in Renderscript’s design. Most existing compute and graphics platforms require elaborate glue logic to tie the high performance code back to the core application code. This code is very bug prone and usually painful to write. The static analysis we do in the host Renderscript compiler is helpful in solving this issue. Each user script generates a Dalvik “glue” class. Names for the glue class and its accessors are derived from the contents of the script. This greatly simplifies the use of the scripts from Dalvik.

Example: The Application Level

Given these trade-offs, what does a simple compute application look like? In this very basic example we will take a normal android.graphics.Bitmap object and run a script that copies it to a second bitmap, converting it to monochrome along the way. Let’s look at the application code which invokes the script before we look at the script itself; this comes from the HelloCompute SDK sample:

    private Bitmap mBitmapIn;
    private Bitmap mBitmapOut;
    private RenderScript mRS;
    private Allocation mInAllocation;
    private Allocation mOutAllocation;
    private ScriptC_mono mScript;

    private void createScript() {
        mRS = RenderScript.create(this);

        mInAllocation = Allocation.createFromBitmap(mRS, mBitmapIn,
                Allocation.MipmapControl.MIPMAP_NONE,
                Allocation.USAGE_SCRIPT);
        mOutAllocation = Allocation.createTyped(mRS, mInAllocation.getType());

        mScript = new ScriptC_mono(mRS, getResources(), R.raw.mono);

        mScript.set_gIn(mInAllocation);
        mScript.set_gOut(mOutAllocation);
        mScript.set_gScript(mScript);
        mScript.invoke_filter();
        mOutAllocation.copyTo(mBitmapOut);
    }

This function assumes that the two bitmaps have already been created and are of the same size and format.

The first thing all Renderscript applications need is a context object. This is the core object used to create and manage all other Renderscript objects. The first line of the function creates the object, mRS. This object must be kept alive for the duration the application intends to use it or any objects created with it.

The next two function calls create compute allocations from the Bitmaps. Renderscript has its own memory allocator, because the memory may potentially be shared by multiple processors and possibly exist in more than one memory space. When an allocation is created its potential uses need to be enumerated so the system may choose the correct type of memory for its intended uses.

The first function, createFromBitmap(), creates a matching Renderscript allocation object and copies the contents of the bitmap into the allocation. Allocations are the basic units of memory used in Renderscript. The second Allocation, created with createTyped(), is identical in structure to the first. The definition of that structure is retrieved from the first with the getType() query. Renderscript types define the structure of an Allocation. In this case the type was generated from the height, width, and format of the incoming bitmap.

The next line loads the script, which is named “mono.rs”. R.raw.mono identifies it; scripts are stored as raw resources in an application’s APK. Note the name of the auto-generated “glue” class, ScriptC_mono.

The next three lines set properties of the script, using generated methods in the “glue” class.

Now we have everything set up. The function invoke_filter() actually does some work for us. This causes the function filter() in the script to be called. If the function had parameters they could be passed here. Return values are not allowed as invocations are asynchronous.

The last line of the function copies the result of our compute script back to the managed bitmap; it has the necessary synchronization code built-in to ensure the script has completed running.

Example: The Script

Here’s the Renderscript stored in mono.rs which the application code above invokes:#pragma version(1) #pragma rs java_package_name(com.android.example.hellocompute) rs_allocation gIn; rs_allocation gOut; rs_script gScript; const static float3 gMonoMult = {0.299f, 0.587f, 0.114f}; void root(const uchar4 *v_in, uchar4 *v_out, const void *usrData, uint32_t x, uint32_t y) { float4 f4 = rsUnpackColor8888(*v_in); float3 mono = dot(f4.rgb, gMonoMult); *v_out = rsPackColorTo8888(mono); } void filter() { rsForEach(gScript, gIn, gOut, 0); }The first line is simply an indication to the compiler which revision of the native Renderscript API the script is written against. The second line controls the package association of the generated reflected code.The three globals listed correspond to the globals which were set up in our managed code. The fourth global is not reflected because it is marked as static. Non-static, const, globals are also allowed but only generate a get reflected method. This can be useful for keeping constants in sync between scripts and managed code.The functionroot()is special to renderscript. Conceptually it’s similar tomain()in C. When a script is invoked by the runtime, this is the function that will be called. In this case the parameters are the incoming and outgoing pixels from our allocation. A generic user pointer is also provided along with the address within the allocation that this invocation is processing. This example ignores these parameters.The three lines of the root function unpack the pixel from RGBA_8888 to a vector of four floats. The second line uses a built-in math function to compute the dot product of the monochrome constants with the incoming pixel data to get our grey level. Note that while dot returns a single float it can be assigned to a float3 which simply copies the value to each of the x, y, and z components of the float3. In the end we use another builtin to repack the floats back to a 32 bit pixel. 
This is also an example of an overloaded function, as there are separate versions of rsPackColorTo8888 which take RGB (float3) or RGBA (float4) data. If A is not provided the overloaded functions assume a value of 1.0f.

The filter() function is called from managed code to do the conversion. It simply does a compute launch on each element of the allocation. The first parameter is the script to be launched - the root function of this script will be invoked for each element in the allocation. The second and third parameters are the input and output data allocations. The last parameter is the pointer for the user data if we desired to pass additional information to the root function.

The forEach will launch across multiple threads if the device has multiple processors. In the future forEach can provide a transition point where control may pass from one processor to another. In this example it is reasonable to expect that in the future filter() would get executed on the CPU and root() would occur on a GPU or DSP.

I hope this gives a glimpse into the design behind Renderscript and a simple example of how it can be used.
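
To make the per-pixel arithmetic concrete, here is a rough plain-Java sketch of what root() and filter() compute. It is not Renderscript and uses no Android API: the class name MonoSketch, the packed-int byte layout (R in the low byte, A in the high byte), the round-to-nearest repacking, and the use of a parallel stream as a stand-in for the multi-threaded rsForEach launch are all assumptions made for illustration.

```java
import java.util.stream.IntStream;

/** Plain-Java sketch of the mono.rs kernel; hypothetical names, not an Android API. */
final class MonoSketch {
    // Same luminance weights as gMonoMult in the script.
    static final float[] MONO_MULT = {0.299f, 0.587f, 0.114f};

    /**
     * Counterpart of root(): converts one packed pixel to grey.
     * Assumed layout: R in the low byte, then G, then B, with A in the high byte.
     */
    static int monoPixel(int rgba) {
        // Unpack each 8-bit channel to a float in [0, 1].
        float r = (rgba & 0xFF) / 255.0f;
        float g = ((rgba >>> 8) & 0xFF) / 255.0f;
        float b = ((rgba >>> 16) & 0xFF) / 255.0f;
        // The dot product of the colour with the weights gives one grey level.
        float mono = r * MONO_MULT[0] + g * MONO_MULT[1] + b * MONO_MULT[2];
        // Repack: the grey level is copied to R, G and B, and alpha is set to
        // fully opaque. Rounding to nearest is an assumption of this sketch.
        int level = Math.min(255, Math.round(mono * 255.0f));
        return 0xFF000000 | (level << 16) | (level << 8) | level;
    }

    /**
     * Counterpart of filter(): runs the kernel once per element.
     * parallel() stands in for the multi-threaded launch described above.
     */
    static int[] filter(int[] in) {
        int[] out = new int[in.length];
        IntStream.range(0, in.length).parallel().forEach(i -> out[i] = monoPixel(in[i]));
        return out;
    }

    public static void main(String[] args) {
        int[] pixels = {0xFF0000FF, 0xFF00FF00, 0xFFFF0000, 0xFFFFFFFF};
        for (int p : filter(pixels)) {
            System.out.printf("%08X%n", p);
        }
    }
}
```

Each element is processed independently of its neighbours, which is what makes it safe to spread the work across threads (or, eventually, across different processors).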

Fragments For All

Posted: 2011-03-03 14:10:23 UTC-08:00

[This post is by Xavier Ducrohet, Android SDK Tech Lead. — Tim Bray]

A few weeks ago, Dianne Hackborn wrote about the new Fragments API, a mechanism that makes it easier for applications to scale across a variety of screen sizes.

However, as Dianne noted, this new API, which is part of Honeycomb, does not help developers whose applications target earlier versions of Android.

Today we’ve released a static library that exposes the same Fragments API (as well as the new LoaderManager and a few other classes) so that applications compatible with Android 1.6 or later can use fragments to create tablet-compatible user interfaces.

This library is available through the SDK Updater; it’s called “Android Compatibility package”.

Heading for GDC

Posted: 2011-02-27 14:47:26 UTC-08:00

[This post is by Chris Pruett, who writes regularly about games here, and is obviously pretty cranked about this conference. — Tim Bray]

Android will descend in force upon the Game Developers Conference in San Francisco this week; we’re offering a full day packed with sessions covering everything you need to know to build games on Android.

From 10 AM to 5 PM on Tuesday the 1st, North Hall Room 121 will be ground zero for Android Developer Day, with five engineering-focused sessions on everything from compatibility to native audio and graphics. Here's a quick overview; there’s more on the Game Developers Conference site:

- Building Aggressively Compatible Android Games — Chris Pruett

- C++ On Android Just Got Better: The New NDK — Daniel Galpin and Ian Ni-Lewis

- OpenGL ES 2.0 on Android: Building Google Body — Nico Weber

- Android Native Audio — Glenn Kasten and Jean-Michel Trivi

- Evading Pirates and Stopping Vampires Using License Server, In App Billing, and AppEngine — Daniel Galpin and Trevor Johns

Our crack team of engineers and advocates spend their nights devising new ways to bring high-end game content to Android, and a full day of sessions just wasn't enough to appease them. So in addition, you can find incisive Android insight in other tracks:

- REPLICA ISLAND: Building a Successful Android Game — Chris Pruett (1:45 PM Monday, Smartphone Summit)

- Publishing Games Through Android Market — Eric Chu (1:30 PM Wednesday, Main Track)

Finally, you can visit us in the Google booth on the GDC Expo floor; stop by, fondle the latest devices, and check out the awesome games that are already running on them. We're foaming at the mouth with excitement about the Game Developers Conference next week, and you should be too.

Hope to see you there!

Animation in Honeycomb

Posted: 2011-02-24 14:54:21 UTC-08:00

[This post is by Chet Haase, an Android engineer who specializes in graphics and animation, and who occasionally posts videos and articles on these topics on his CodeDependent blog at graphics-geek.blogspot.com. — Tim Bray]

One of the new features ushered in with the Honeycomb release is a new animation system, a set of APIs in a whole new package (android.animation) that makes animating objects and properties much easier than it was before.

"But wait!" you blurt out, nearly projecting a mouthful of coffee onto your keyboard while reading this article, "Isn't there already an animation system in Android?"

Animation Prior to Honeycomb

Indeed, Android already has animation capabilities: there are several classes and lots of great functionality in the android.view.animation package. For example, you can move, scale, rotate, and fade Views and combine multiple animations together in an AnimationSet object to coordinate them. You can specify animations in a LayoutAnimationController to get automatically staggered animation start times as a container lays out its child views. And you can use one of the many Interpolator implementations like AccelerateInterpolator and BounceInterpolator to get natural, nonlinear timing behavior.

But there are a couple of major pieces of functionality lacking in the previous system.

For one thing, you can animate Views... and that's it. To a great extent, that's okay. The GUI objects in Android are, after all, Views. So as long as you want to move a Button, or a TextView, or a LinearLayout, or any other GUI object, the animations have you covered. But what if you have some custom drawing in your view that you'd like to animate, like the position of a Drawable, or the translucency of its background color? Then you're on your own, because the previous animation system only understands how to manipulate View objects.

The previous animations also have a limited scope: you can move, rotate, scale, and fade a View... and that's it. What about animating the background color of a View? Again, you're on your own, because the previous animations had a hard-coded set of things they were able to do, and you could not make them do anything else.

Finally, the previous animations changed the visual appearance of the target objects... but they didn't actually change the objects themselves. You may have run into this problem. Let's say you want to move a Button from one side of the screen to the other. You can use a TranslateAnimation to do so, and the button will happily glide along to the other side of the screen. And when the animation is done, it will gladly snap back into its original location.
So you find the setFillAfter(true) method on Animation and try it again. This time the button stays in place at the location to which it was animated. And you can verify that by clicking on it - Hey! How come the button isn't clicking? The problem is that the animation changes where the button is drawn, but not where the button physically exists within the container. If you want to click on the button, you'll have to click the location that it used to live in. Or, as a more effective solution (and one just a tad more useful to your users), you'll have to write your code to actually change the location of the button in the layout when the animation finishes.

It is for these reasons, among others, that we decided to offer a new animation system in Honeycomb, one built on the idea of "property animation."

Property Animation in Honeycomb

The new animation system in Honeycomb is not specific to Views, is not limited to specific properties on objects, and is not just a visual animation system. Instead, it is a system that is all about animating values over time, and assigning those values to target objects and properties - any target objects and properties. So you can move a View or fade it in. And you can move a Drawable inside a View. And you can animate the background color of a Drawable. In fact, you can animate the values of any data structure; you just tell the animation system how long to run for, how to evaluate between values of a custom type, and what values to animate between, and the system handles the details of calculating the animated values and setting them on the target object.

Since the system is actually changing properties on target objects, the objects themselves are changed, not simply their appearance. So that button you move is actually moved, not just drawn in a different place. You can even click it in its animated location. Go ahead and click it; I dare you.

I'll walk briefly through some of the main classes at work in the new system, showing some sample code when appropriate. But for a more detailed view of how things work, check out the API Demos in the SDK for the new animations. There are many small applications written for the new Animations category (at the top of the list of demos in the application, right before the word App. I like working on animation because it usually comes first in the alphabet).

In fact, here's a quick video showing some of the animation code at work. The video starts off on the home screen of the device, where you can see some of the animation system at work in the transitions between screens. Then the video shows a sampling of some of the API Demos applications, to show the various kinds of things that the new animation system can do.
This video was taken straight from the screen of a Honeycomb device, so this is what you should see on your system, once you install API Demos from the SDK.
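
The idea of "animating values over time" described above can be sketched in a few lines of plain Java. This is only an illustration of the concept under stated assumptions, not the android.animation API: the names PropertyAnimationSketch, Evaluator, Box and animate are hypothetical, and a simple loop simulates elapsed time where a real system would be driven by a clock.

```java
import java.util.function.Consumer;

/** Plain-Java sketch of property animation; hypothetical names, not the android.animation API. */
final class PropertyAnimationSketch {
    /** Says how to compute a value between start and end for a fraction in [0, 1]. */
    interface Evaluator<T> {
        T evaluate(float fraction, T start, T end);
    }

    /** A target object with an ordinary property that the animation really changes. */
    static final class Box {
        float x;
        void setX(float x) { this.x = x; }
    }

    /** Linear evaluator for floats. */
    static final Evaluator<Float> FLOAT_EVALUATOR =
            (fraction, start, end) -> start + fraction * (end - start);

    /**
     * Steps through the animation at a fixed frame interval, computing each
     * intermediate value and handing it to the setter on the target.
     */
    static <T> void animate(long durationMs, long frameMs, T start, T end,
                            Evaluator<T> evaluator, Consumer<T> setter) {
        for (long elapsed = 0; elapsed < durationMs; elapsed += frameMs) {
            float fraction = (float) elapsed / durationMs;
            setter.accept(evaluator.evaluate(fraction, start, end));
        }
        // Always finish exactly on the end value.
        setter.accept(evaluator.evaluate(1.0f, start, end));
    }

    public static void main(String[] args) {
        Box box = new Box();
        animate(300, 100, 0f, 200f, FLOAT_EVALUATOR, x -> {
            box.setX(x);
            System.out.println("x = " + x);
        });
        System.out.println("final x = " + box.x);
    }
}
```

Because the setter writes to the object's own field, the Box really is at its end position when the loop finishes, which is the difference from the older system that only changed where a View was drawn. Supporting a custom type in this sketch would only require supplying another Evaluator.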